Sign Language Images Dataset

The Sign Language MNIST data was created by greatly extending a small set (1,704) of color images that were not cropped around the hand region of interest.

Dataset Card for ASL-MNIST

This is a FiftyOne dataset with 34,627 samples of American Sign Language (ASL) alphabet images, converted from the original Kaggle Sign Language MNIST dataset into a format optimized for computer vision workflows.

Installation

If you haven't already, install FiftyOne.

In the US, approximately 500,000 people use American Sign Language (ASL) as their primary means of communication. This real-life, large-scale sign language dataset comprising over 25,000 annotated videos and evaluated with state... This dataset contains 180,717 images of sign language gestures, including 41 static gestures and 95 dynamic gestures.

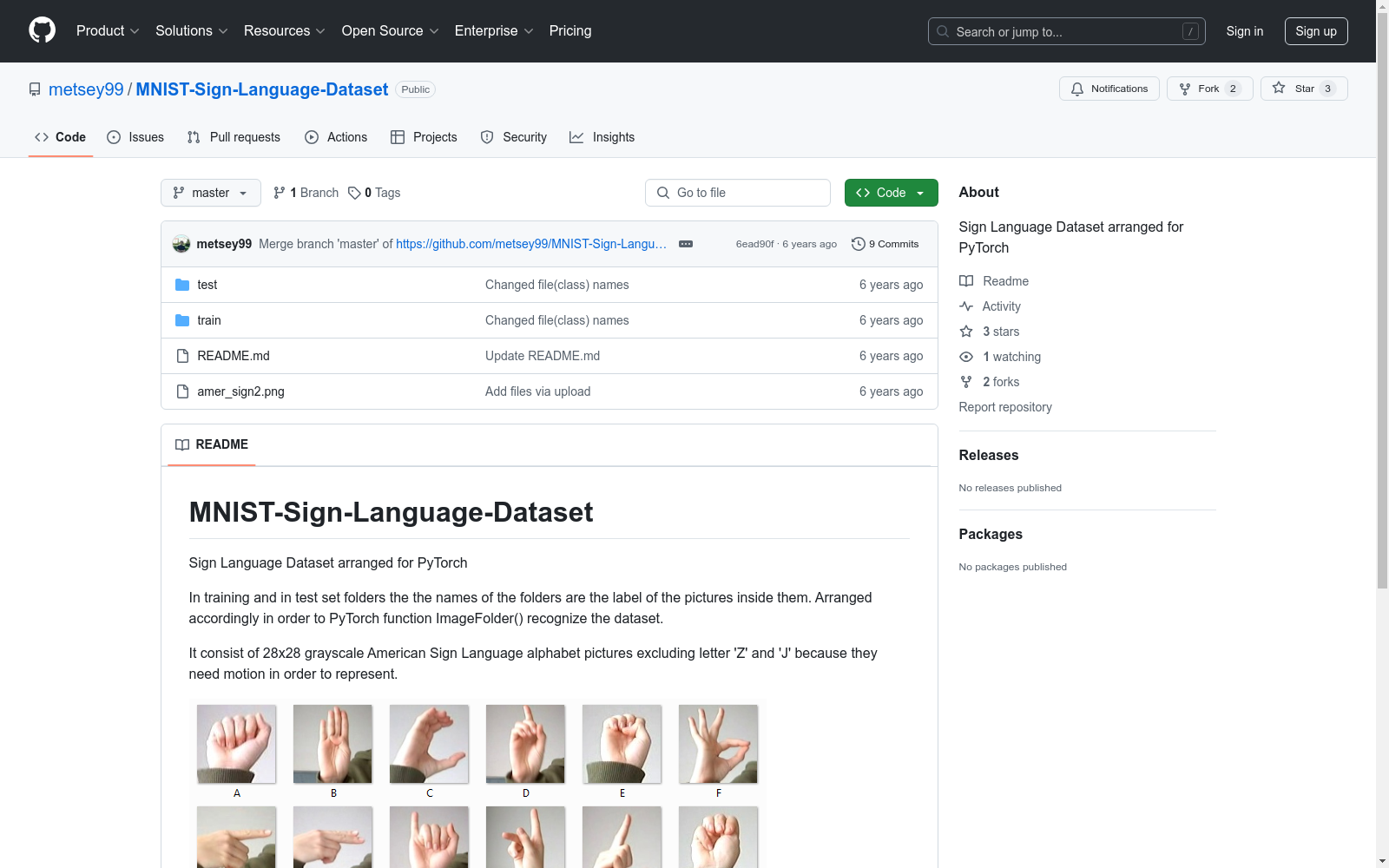

Some samples of the ASL Alphabet and Sign Language Digits datasets.

The data was captured across multiple scenes, camera angles, and lighting conditions. Each gesture is annotated with 21 landmarks, a gesture type, and gesture attributes. This dataset can be used for tasks such as AI.
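To make the annotation description concrete, here is a minimal sketch of how one annotated gesture might be held in memory. Only the count of 21 landmarks comes from the description above; the class name `GestureAnnotation`, its field names, and the pixel-coordinate convention are assumptions for illustration and do not reflect the dataset's actual file format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

NUM_LANDMARKS = 21  # the only value taken from the dataset description


@dataclass
class GestureAnnotation:
    """Hypothetical in-memory record for one annotated gesture."""

    landmarks: List[Tuple[float, float]]  # assumed (x, y) pixel coordinates
    gesture_type: str                     # assumed label, e.g. "static" or "dynamic"
    attributes: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject records that do not carry exactly 21 landmarks.
        if len(self.landmarks) != NUM_LANDMARKS:
            raise ValueError(
                f"expected {NUM_LANDMARKS} landmarks, got {len(self.landmarks)}"
            )

    def bounding_box(self) -> Tuple[float, float, float, float]:
        """Return (x_min, y_min, x_max, y_max) enclosing all landmarks."""
        xs = [x for x, _ in self.landmarks]
        ys = [y for _, y in self.landmarks]
        return min(xs), min(ys), max(xs), max(ys)
```

A bounding box derived from the landmarks is one simple way to crop an image to the hand region before feeding it to a classifier.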

A Dataset for Irish Sign Language Recognition, Oliveira et al., 2017.
A comparison between end-to-end approaches and feature extraction based approaches for Sign Language recognition, Oliveira et al., 2017.

Our dataset contains several folders holding images in different formats: root images, augmented images, preprocessed images (grayscale, histogram-equalized, and binarized), and MediaPipe skeleton landmark images for the various alphabet classes.
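The three preprocessing variants named above (grayscale, histogram equalization, binarization) can be sketched in plain Python as follows. This is an illustration of the standard operations on nested lists of pixel values, not the pipeline the dataset authors used; the function names, the BT.601 luma weights, and the default threshold of 128 are assumptions.

```python
def to_grayscale(rgb):
    """Convert rows of (r, g, b) tuples (0-255) to rows of gray levels (0-255).

    Uses the common ITU-R BT.601 luma weights (an assumption, not from the source).
    """
    return [
        [int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
        for row in rgb
    ]


def equalize_histogram(gray):
    """Spread gray levels over 0-255 using the cumulative histogram."""
    flat = [p for row in gray for p in row]
    hist = [0] * 256
    for p in flat:
        hist[p] += 1

    # Cumulative distribution of gray levels.
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)

    cdf_min = next(c for c in cdf if c > 0)
    n = len(flat)
    if n == cdf_min:  # constant image: nothing to equalize
        return [row[:] for row in gray]

    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * 255)) for c in cdf]
    return [[lut[p] for p in row] for row in gray]


def binarize(gray, threshold=128):
    """Map each pixel to 255 if it is at or above the threshold, else 0."""
    return [[255 if p >= threshold else 0 for p in row] for row in gray]
```

In practice an image library would do this far faster; the pure-Python version is only meant to show what each stored variant represents.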

American Sign Language Dataset | IEEE DataPort

Each folder is divided into two types of subfolders, each containing a separate set of images. The dataset's focus on high-resolution hand gesture images provides a strong foundation for static ASL recognition, with potential extensions to incorporate facial expressions and body postures via cross-modal fusion, enabling comprehensive sign language recognition systems.

2. Background

The existing limitations in sign language recognition datasets introduce crucial challenges to...

This dataset comprises 26,000 images representing American Sign Language (ASL) hand gestures corresponding to the English alphabet (A-Z). The dataset is organized into 26 folders, each labeled with a corresponding letter, containing multiple image samples to ensure variability in hand positioning, lighting, and individual hand differences. The images capture diverse hand gestures, making the.
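A folder-per-letter layout like the one described above can be indexed with the standard library alone. The sketch below assumes the folders are named with single uppercase letters (`A` to `Z`) and that images use `.jpg`, `.jpeg`, or `.png` extensions; neither detail is stated in the description, and the function names are hypothetical.

```python
import string
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed file extensions


def index_letter_folders(root):
    """Map each letter that has a folder under `root` to its sorted image paths."""
    root = Path(root)
    index = {}
    for letter in string.ascii_uppercase:
        folder = root / letter  # assumed folder naming: "A", "B", ..., "Z"
        if not folder.is_dir():
            continue
        index[letter] = sorted(
            p for p in folder.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
        )
    return index


def to_samples(index):
    """Flatten the index into (path, label) pairs with A -> 0, ..., Z -> 25."""
    return [
        (path, ord(letter) - ord("A"))
        for letter, paths in sorted(index.items())
        for path in paths
    ]
```

Counting `len(paths)` per letter in the returned index is a quick way to check whether the classes are balanced before training.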

Sign Language MNIST | Kaggle

The Signclusive Mediapipe dataset is a collection designed for developing and training machine learning models that recognize sign language. It contains images of the 26 letters of the English alphabet plus the "space" sign, for a total of 27 distinct classes. Each class is represented by images from five different signers.
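As a small illustration of the 27-class label space, the sketch below builds a class list and decodes predicted class indices back into text. The class ordering (A to Z followed by "space") and the helper name `decode` are assumptions; the dataset may order or name its classes differently.

```python
import string

# Assumed ordering: 26 letters followed by the "space" class.
CLASSES = list(string.ascii_uppercase) + ["space"]
LABEL_TO_INDEX = {name: i for i, name in enumerate(CLASSES)}


def decode(indices):
    """Turn a sequence of predicted class indices into a readable string."""
    return "".join(
        " " if CLASSES[i] == "space" else CLASSES[i]
        for i in indices
    )
```

For example, `decode([7, 8, 26, 0])` joins the classes H, I, space, and A into a single string.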

This is a dataset of images of American Sign Language (ASL) signs. Each directory has more than 80 photos. The images were collected from students at UTEC (University of Engineering and Technology, Peru).

This dataset was developed to implement a sign language translator. The project was based on digital image processing techniques and implemented in.